The Road to Adaptive AI

The fusion of Neural Architecture Search (NAS) and Continual Learning (CL) led me down a rabbit hole where AI’s adaptability and autonomy are pushed beyond traditional boundaries. The intersection of these two domains is not just another merger of two disjoint areas; it’s a potential paradigm shift in how we approach AI development.

Imagine an AI that evolves, learns continually, and adapts without human intervention, much as living organisms adapt to their environment. A deep dive into NAS and CL reveals a future where AI systems can autonomously refine their architectures, ensuring they remain optimal as they encounter new tasks or data. It’s analogous to a human child growing, learning, and adapting to the world around them, but at an accelerated pace. We have formalised these frameworks as Continual Neural Architecture Search (CNAS).

Where Neural Architecture Search Meets *Continual Learning*

CNAS frameworks begin with NAS, a technique that automates the design of neural network architectures. The traditional, tiresome process of manually tweaking layers and parameters could soon be behind us. NAS introduces methodologies in which AI designs its successors, iteratively optimising them over generations.
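To make "designs its successors over generations" a little more concrete, here is a minimal sketch of one common NAS flavour, evolutionary search. Everything in it is an assumption for illustration: the search space (`WIDTH_CHOICES`, `MAX_DEPTH`), the mutation operators, and especially `proxy_score`, which is a toy stand-in for the expensive "train the candidate and measure validation accuracy" step. It is not the CNAS framework itself.

```python
import random

# Hypothetical search space: an architecture is a list of hidden-layer widths.
WIDTH_CHOICES = [16, 32, 64, 128]
MAX_DEPTH = 4


def random_architecture(rng):
    """Sample a random architecture with 1 to MAX_DEPTH layers."""
    depth = rng.randint(1, MAX_DEPTH)
    return [rng.choice(WIDTH_CHOICES) for _ in range(depth)]


def mutate(arch, rng):
    """Return a copy of arch with one change: add, remove, or resize a layer."""
    child = list(arch)
    op = rng.choice(["resize", "add", "remove"])
    if op == "add" and len(child) < MAX_DEPTH:
        child.insert(rng.randrange(len(child) + 1), rng.choice(WIDTH_CHOICES))
    elif op == "remove" and len(child) > 1:
        child.pop(rng.randrange(len(child)))
    else:
        # Also the fallback when an add or remove would leave the search space.
        child[rng.randrange(len(child))] = rng.choice(WIDTH_CHOICES)
    return child


def proxy_score(arch):
    """Toy fitness (assumption): prefer ~160 total units and fewer layers.

    A real NAS loop would train and validate each candidate here.
    """
    return -abs(sum(arch) - 160) - 5 * len(arch)


def evolve(generations=20, population_size=8, seed=0):
    """Keep the best half each generation and refill with mutated children."""
    rng = random.Random(seed)
    population = [random_architecture(rng) for _ in range(population_size)]
    for _ in range(generations):
        population.sort(key=proxy_score, reverse=True)
        parents = population[: population_size // 2]
        children = [
            mutate(rng.choice(parents), rng)
            for _ in range(population_size - len(parents))
        ]
        population = parents + children
    return max(population, key=proxy_score)


if __name__ == "__main__":
    best = evolve()
    print("best architecture:", best, "score:", proxy_score(best))
```

Because the top half of each generation survives unchanged, the best score never gets worse from one generation to the next. In practice the scoring step dominates the cost, which is why real NAS systems lean on tricks such as weight sharing or cheap performance predictors.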

The second half of CNAS is where it truly shines: Continual Learning. CL approaches allow these AI systems to learn from new data without losing previously acquired knowledge, avoiding a common pitfall known as catastrophic forgetting.

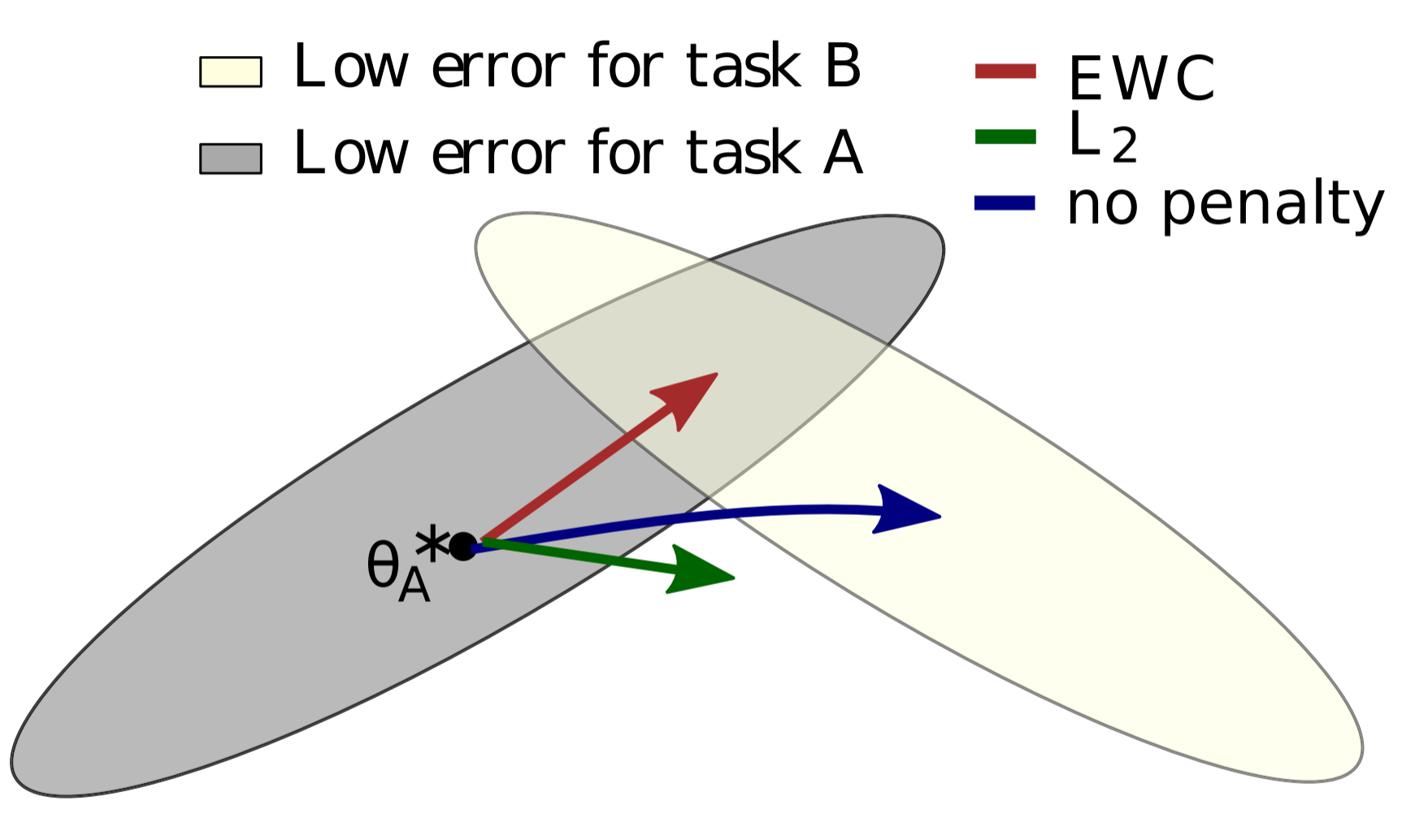

By creating NAS / CL hybrid frameworks, we unlock the potential for AI systems that are not only self-improving, but also adaptable to new challenges in a domain-agnostic manner. One popular Continual Learning approach is Elastic Weight Consolidation (EWC). EWC looks for parameters that lie in the overlap of the “good solution” regions of two tasks: while training on the new task, it penalises changes to the weights that mattered most for the old one.
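As a rough illustration of how EWC stays near the old task's solution, the sketch below computes the standard EWC objective: the new task's loss plus a quadratic penalty that anchors each weight to its old-task value, scaled by an importance estimate (the diagonal of the Fisher information). The function names, the flat list representation of parameters, and the example numbers are my own assumptions; a real implementation would operate on framework tensors and estimate the Fisher values from old-task data.

```python
def ewc_penalty(params, anchor_params, fisher_diag, strength):
    """Quadratic EWC penalty: (strength / 2) * sum_i F_i * (theta_i - theta*_i)^2.

    params        -- current weights while training on the new task
    anchor_params -- weights saved after training on the old task
    fisher_diag   -- per-weight importance for the old task (assumed precomputed)
    strength      -- how strongly the old task is protected
    """
    return 0.5 * strength * sum(
        f * (p - a) ** 2
        for p, a, f in zip(params, anchor_params, fisher_diag)
    )


def ewc_loss(new_task_loss, params, anchor_params, fisher_diag, strength=1.0):
    """Total objective: new-task loss plus the penalty for drifting from the old task."""
    return new_task_loss + ewc_penalty(params, anchor_params, fisher_diag, strength)


if __name__ == "__main__":
    anchor = [1.0, 2.0]   # hypothetical old-task solution
    fisher = [5.0, 0.1]   # first weight mattered far more for the old task
    print(ewc_penalty([2.0, 2.0], anchor, fisher, strength=2.0))  # move the important weight
    print(ewc_penalty([1.0, 3.0], anchor, fisher, strength=2.0))  # move the unimportant weight
```

Moving the important weight by one unit costs far more than moving the unimportant one by the same amount, so the optimiser is nudged towards solutions that change only what the old task can spare.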

Furthermore, these systems could potentially generalise learned knowledge to new, unforeseen tasks. For instance, when humans learn a task, such as riding a bicycle, they can carry that knowledge over to similar tasks, like riding a motorcycle.

How CNAS Overcomes the Caveats of Traditional Methodologies

The current, widely adopted methodologies in Deep Learning are simply optimised for a single task with strict boundaries. When the data sources shift ever so slightly, we typically experience some form of Data Drift. There are a few types of drift that can occur in a deep learning problem, but they typically all have the same result: an abrupt drop in model performance.
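To give a feel for what spotting drift can look like, here is a deliberately simple sketch that flags a shift in the mean of a single numeric feature. The function names and the default threshold of 3.0 are assumptions chosen for illustration, and a mean-shift check only catches one kind of drift; production monitoring typically uses richer statistical tests across many features.

```python
import math
import statistics


def mean_shift_score(reference, recent):
    """How many standard errors the recent mean sits from the reference mean."""
    ref_mean = statistics.fmean(reference)
    ref_std = statistics.stdev(reference)
    standard_error = ref_std / math.sqrt(len(recent))
    shift = abs(statistics.fmean(recent) - ref_mean)
    if standard_error == 0:
        # Constant reference data: any change at all counts as an unbounded shift.
        return 0.0 if shift == 0 else math.inf
    return shift / standard_error


def has_drifted(reference, recent, threshold=3.0):
    """Flag drift when the mean shift exceeds the (assumed) threshold."""
    return mean_shift_score(reference, recent) > threshold


if __name__ == "__main__":
    training_feature = [0.9, 1.0, 1.1, 1.0, 0.95, 1.05]
    print(has_drifted(training_feature, [1.0, 0.98, 1.02]))  # similar data
    print(has_drifted(training_feature, [2.0, 2.1, 1.9]))    # shifted data
```

A check like this only tells you that something changed. Deciding how the model should respond is the part that CNAS aims to automate.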

Machine Learning Engineers are expected to retune models continually as the datasets’ underlying distributions change. With the adoption of CNAS frameworks, we can:

- Ensure scalability through large-scale autonomous optimisation

- Improve model reliability and overcome Data Drift

- Build more optimal models autonomously

- Democratise AI for non-expert usage

This fusion also addresses a critical challenge in AI: the resource-intensive nature of training and maintaining models. With NAS and CL working hand in hand, AI can self-optimise in a more resource-efficient manner, potentially making powerful AI tools more accessible and sustainable.

Looking Ahead

By leveraging and combining approaches from Neural Architecture Search and Continual Learning, more robust and adaptive agents can be developed. Through self-development and lifelong plasticity, CNAS aims to overcome numerous limitations posed by human factors and result in more optimal models.

In doing so, Continual Neural Architecture Search could potentially introduce a new spectrum of applications where the functionality of the model is pliable, even after the deployment phase when remote access is often limited or unavailable.

The implications of this are vast and varied, from autonomous vehicles that adapt to new driving conditions in real time, to personalised AI tutors that evolve with a student’s learning pace. The possibilities are as limitless as they are exciting.

Thanks for reading, and stay tuned for more updates on our journey with CNAS! 🌌